sidfaber

on 2 November 2020

Please note that this blog post has old information that may no longer be correct. We invite you to read the content as a starting point but please search for more updated information in the ROS documentation.

Kubernetes provides many critical attributes that can contribute to a robust robotics platform: isolated workloads, automated deployments, self-configuring work processes, and an infrastructure that is both declarative and immutable. However, robots designed with ROS 2 face challenges in setting up individual components on Kubernetes so that all parts smoothly work together. In this blog series, we construct a prototype ROS 2 system distributed across multiple computers using Kubernetes. Our goal is not only to provide you with a working configuration, but also to help you understand why it succeeds and overcome future design challenges.

This is the first article in a series of four posts describing ROS 2 applications on Kubernetes with MicroK8s:

- Part 1 (this article): ROS 2 and Kubernetes basics

- Part 2: ROS 2 on Kubernetes: a simple talker and listener setup

- Part 3: Distribute ROS 2 across machines with Kubernetes

- Part 4: Exploring ROS 2 Kubernetes configurations

Getting started with Kubernetes can involve a steep learning curve, so our prototype will use MicroK8s to make it easy. MicroK8s is a lightweight, pure-upstream Kubernetes distribution that offers low-touch, self-healing, highly available clusters. Its low resource footprint makes it ideal for running on robot computers. Even with very little Kubernetes experience you’ll quickly have a complete cluster up and running.

This article introduces some of the core concepts for our prototype design. Follow-on articles instantiate a ROS 2 talker/listener prototype on a single computer, then extend the prototype with alternative options and distribute compute across multiple computers.

If you’re new to Kubernetes, refer to Kubernetes documentation and microk8s.io to get started. A background in the ROS 2 Tutorials may also be helpful as you work through these articles.

Important Terms

Wait, don't skip over this section, this is really important!

A few key terms collide when discussing ROS 2 and Kubernetes together:

Node

| ROS | Kubernetes |
| --- | --- |
| In ROS, a process that performs computation. ROS nodes communicate with each other to form a graph that represents a robot control system. | In Kubernetes, a computer that runs a workload. Kubernetes nodes are combined together in a computing cluster to run processing containers. |

Throughout this article “node” will refer to Kubernetes nodes and “ROS node” will be used for robotics.

Service

| ROS | Kubernetes |
| --- | --- |
| In ROS, a method to make a request from a node and wait for a reply. A service receives a formatted message from a client and sends back a reply when its task is complete. | In Kubernetes, “an abstract way to expose an application”. Clients use the Kubernetes interface to locate a service which may migrate anywhere within the compute cluster. |

Throughout this article, “service” refers to ROS services. Kubernetes services will not be covered.

Namespace

| ROS | Kubernetes |
| --- | --- |
| In ROS, a token prepended to a topic or service base name. Topics and services can then be accessed through a relative or an absolute name. | In Kubernetes, a way to separate resources into virtual clusters running on the same physical cluster. Kubernetes uses the kube-system namespace for system infrastructure. |

Namespaces will rarely be used in this article and will be identified as belonging to ROS or Kubernetes.

The following additional terms cover a few fundamental Kubernetes concepts:

Container

Containers execute a repeatable, standardised and ready-to-run software package. The container holds all the necessary software, supporting libraries and default settings to run.

Pod

Pods deploy a workload as a group of one or more containers. Containers within a pod all run on the same host, and all containers typically start when the pod starts. Containers within a pod share access to network and storage resources.

Container Network Interface, CNI

Kubernetes uses network plugins to provide connectivity for containers. CNI plugins follow the Container Network Interface specification for interoperability.

Exploring ROS 2 networking on Kubernetes

ROS 2 operates quite well on Docker, as demonstrated in the ROS Docker tutorial. However, Kubernetes may handle network communications differently from Docker containers. To ensure data flows reliably between containers, we need to explore networking concepts for both ROS 2 and Kubernetes.

All ROS 2 communications use the Data Distribution Service (DDS) to create an internetworked robot control system. The associated wire protocol for DDS is Real-Time Publish-Subscribe (RTPS).

ROS 2 discovery depends on multicast traffic

By default RTPS (and, by extension, ROS 2) uses UDP multicast traffic to locate domain participants. Although RTPS also supports unicast discovery, this feature has not been exposed through the ROS 2 API and depends on an implementation-specific configuration.

Multicast typically works well on a local network, but not all network devices pass multicast traffic. By default, the localhost interface on Linux will not receive multicast traffic. Routers often filter multicast traffic. Calico, the default CNI for MicroK8s, does not support multicast as of this writing.

In order to properly enable ROS 2 discovery, the prototype will configure networking so that multicast traffic flows freely between all network connections on the systems.
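Before deploying ROS 2, it can help to check whether multicast traffic actually reaches a given machine or container. The following is a minimal Python sketch of a multicast listener, not part of the prototype itself. It assumes the commonly used RTPS discovery group 239.255.0.1 and port 7400 (domain 0); the function names are our own, and your DDS implementation may be configured differently.

```python
import socket
import struct

# Assumption: default RTPS discovery multicast group and the discovery port
# for domain 0. Adjust both if your DDS implementation is configured otherwise.
RTPS_GROUP = "239.255.0.1"
RTPS_PORT = 7400


def membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Build the ip_mreq structure expected by the IP_ADD_MEMBERSHIP option."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def open_multicast_listener(group: str = RTPS_GROUP, port: int = RTPS_PORT) -> socket.socket:
    """Open a UDP socket bound to `port` that has joined the multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership_request(group))
    return sock


def wait_for_multicast(timeout_seconds: float = 5.0) -> bool:
    """Return True if any datagram arrives on the group before the timeout."""
    sock = open_multicast_listener()
    sock.settimeout(timeout_seconds)
    try:
        sock.recvfrom(65535)
        return True
    except socket.timeout:
        return False
    finally:
        sock.close()
```

Calling `wait_for_multicast()` inside a container while a ROS 2 node runs elsewhere on the network gives a quick indication of whether discovery traffic gets through. A `False` result suggests that something between the two endpoints is filtering multicast.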

ROS 2 discovery contains network addresses and ports

The RTPS locator protocol used by ROS 2 contains the IP address and TCP or UDP port of DDS participants. This locator data appears in a typical RTPS datagram in the structure defined in the RTPS specification, section 9.3.2, as value type “Locator_t”.

With this data deeply embedded in RTPS traffic, communications typically fail when address or port translation happens between endpoints. The locator data points to endpoints that are not accessible to the recipient, so the receiver simply will not be able to access the service.

The prototype will be configured so that no network address translation (NAT) or port address translation (PAT) occurs between ROS nodes.
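To make the embedded address concrete, here is a minimal Python sketch of how a UDPv4 locator can be encoded and decoded. It follows our reading of the Locator_t layout (a 4-byte kind, a 4-byte port and a 16-byte address field, with an IPv4 address stored in the last four bytes). The helper names and the sample address are hypothetical, and little-endian byte order is an assumption, since the real byte order depends on the RTPS message flags.

```python
import ipaddress
import struct
from typing import NamedTuple

LOCATOR_KIND_UDPV4 = 1  # locator kind value for UDP over IPv4

# Assumption: little-endian layout of kind (int32), port (uint32), address (16 bytes).
LOCATOR_FORMAT = "<iI16s"


class Locator(NamedTuple):
    kind: int
    port: int
    address: str


def pack_udpv4_locator(ip: str, port: int) -> bytes:
    """Encode a UDPv4 locator as 24 bytes."""
    # The IPv4 address occupies the last 4 of the 16 address bytes.
    address = bytes(12) + ipaddress.IPv4Address(ip).packed
    return struct.pack(LOCATOR_FORMAT, LOCATOR_KIND_UDPV4, port, address)


def unpack_udpv4_locator(data: bytes) -> Locator:
    """Decode 24 bytes produced by pack_udpv4_locator."""
    kind, port, address = struct.unpack(LOCATOR_FORMAT, data)
    if kind != LOCATOR_KIND_UDPV4:
        raise ValueError(f"unsupported locator kind: {kind}")
    return Locator(kind, port, str(ipaddress.IPv4Address(address[12:])))


# Hypothetical participant address, used only for illustration.
raw = pack_udpv4_locator("10.1.0.12", 7410)
locator = unpack_udpv4_locator(raw)
```

Because the address and port travel inside the payload, a NAT device that rewrites only the IP and UDP headers leaves `locator.address` pointing at the original, possibly unreachable, endpoint.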

Containers within a pod communicate over the loopback interface

Within a single pod, containers share one network namespace, so they can reach each other on the localhost address (127.0.0.1) through a common loopback interface. However, since all containers in the pod use the same interface, they must coordinate port usage so that no two containers communicate using the same port at the same time.

Should multiple containers within a single pod listen on the same port (for example, identical containers all hosting a web server on port 80), a Kubernetes service can be defined to expose the application and route incoming traffic to containers. However, Kubernetes services typically perform port or address translation which, as we discussed earlier, interferes with ROS 2 communications. Kubernetes services cannot be used for ROS 2 network traffic.

Additionally, ROS 2 does not provide a method for managing ports used by RTPS. For example, a container cannot change the standard RTPS discovery port of 7400, nor can a ROS 2 listener select a port other than its default. As a result, port usage cannot be coordinated across multiple ROS 2 containers running in the same pod, and these containers generally will not be able to communicate.

In order to overcome the limitations in using the loopback interface with ROS 2 traffic, each pod will have no more than one container generating ROS 2 traffic.
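For reference, the RTPS specification derives its default ports from the DDS domain ID and a participant ID rather than leaving the choice to the application. The Python sketch below reproduces that mapping using the specification's default constants as we understand them (port base 7400, domain gain 250, participant gain 2). Individual DDS vendors may override these values, and the function name is our own, so treat the numbers as an illustration only.

```python
from typing import Dict

# Default constants of the RTPS well-known port mapping (assumed unmodified).
PORT_BASE = 7400
DOMAIN_GAIN = 250
PARTICIPANT_GAIN = 2
D0, D1, D2, D3 = 0, 10, 1, 11  # offsets for the four kinds of traffic


def rtps_default_ports(domain_id: int, participant_id: int) -> Dict[str, int]:
    """Compute the default RTPS ports for one participant in one domain."""
    base = PORT_BASE + DOMAIN_GAIN * domain_id
    return {
        "discovery_multicast": base + D0,
        "discovery_unicast": base + D1 + PARTICIPANT_GAIN * participant_id,
        "user_multicast": base + D2,
        "user_unicast": base + D3 + PARTICIPANT_GAIN * participant_id,
    }


ports = rtps_default_ports(domain_id=0, participant_id=0)
# ports["discovery_multicast"] is 7400, the discovery port mentioned above.
```

Note that the multicast ports depend only on the domain ID, so every participant in domain 0 expects discovery traffic on port 7400.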

Every Kubernetes pod gets its own IP address

The Kubernetes network model requires that each pod receive its own IP address, and that pods within a Kubernetes node communicate with each other without NAT.

This means that pods will be able to exchange ROS traffic so long as no other host or network configuration interferes with RTPS traffic.

Design criteria

The above analysis of network communications leads to two key design criteria for a prototype ROS 2 system running on Kubernetes.

For a given pod, only use ROS in a single container

If multiple containers in the same pod run ROS 2, network traffic collides when the containers attempt to talk to each other through the loopback interface. Communications likely will be unpredictable, and multicast discovery typically will not succeed.

Pod design should follow Docker best practices for decoupling applications. Other containers within the pod can still provide back-end processing for the ROS 2 container.

Connect each pod to the host network

The host network allows traffic to flow as though ROS were running on the host, and not inside a container managed by Kubernetes. This decouples ROS 2 traffic from Kubernetes dependencies, solves issues with multicast discovery, and assigns each pod its own IP address.
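Both design criteria can be expressed in a pod definition. The sketch below builds a minimal pod manifest as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The `hostNetwork` field is part of the Kubernetes pod specification; the function name, pod name, image name and arguments are hypothetical placeholders, and this is not necessarily the configuration used later in this series.

```python
import json
from typing import Dict, List


def ros_pod_manifest(name: str, image: str, args: List[str]) -> Dict:
    """Build a pod with a single ROS 2 container attached to the host network."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Use the node's network stack instead of a pod-private one.
            "hostNetwork": True,
            # Exactly one container generates ROS 2 traffic in this pod.
            "containers": [{"name": name, "image": image, "args": args}],
        },
    }


# Hypothetical image and arguments, shown only to illustrate the shape.
manifest = ros_pod_manifest(
    name="ros-talker",
    image="example/ros2-talker:latest",
    args=["ros2", "run", "demo_nodes_cpp", "talker"],
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could then be saved to a file and applied with `kubectl apply -f`, assuming the image exists in a registry that the cluster can reach.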

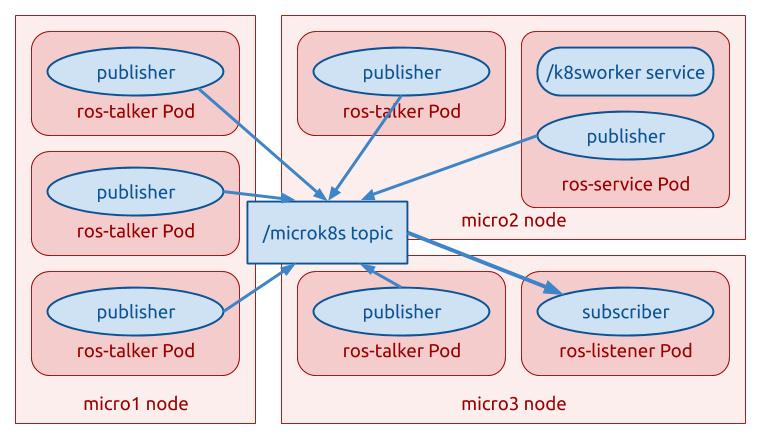

This leads to a prototype design similar to the figure below. A variable number of ROS 2 publisher, subscriber and service nodes exchange data on the /microk8s topic, and these ROS components are distributed across multiple MicroK8s nodes.

In conclusion…

This concludes the first part of this series on running ROS 2 on Kubernetes. We explored network connectivity in enough detail to create a prototype implementation. In part two we configure pods through Kubernetes deployments, and apply a prototype configuration to start up a ROS 2 system on a single-node Kubernetes cluster.

For more information about Kubernetes networking, see this excellent blog post by Kevin Sookocheff.

The MicroK8s documentation covers all aspects of running MicroK8s on Linux, Windows or macOS.

To explore the possibilities of running ROS within Docker, see the Docker tutorial on the ROS Wiki.